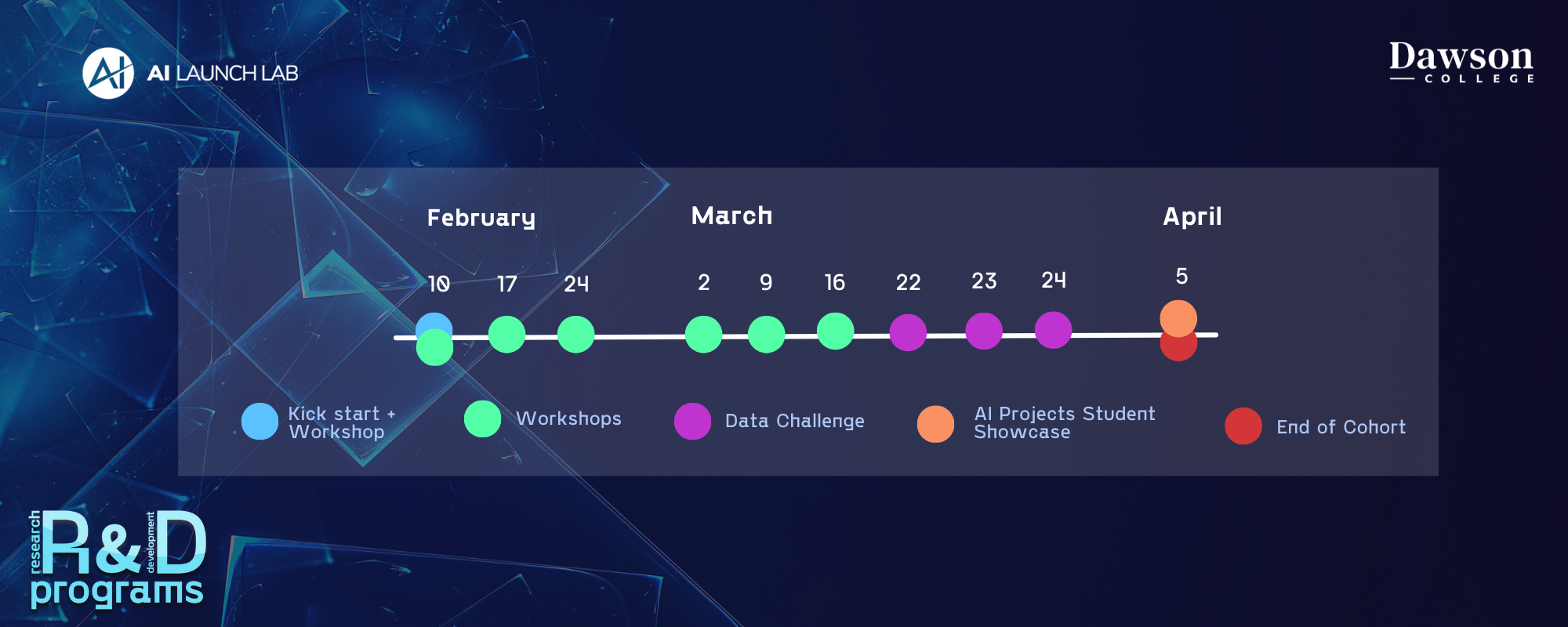

Program Timeline

AI Projects for Winter Cohort 2024

AI Quiz Generator

Our project tackles the challenge of transforming factual statements into questions to assist in the creation of educational resources like quizzes and flashcards, a task that can be time-consuming and labor-intensive for educators. We fine-tuned the google-t5/t5-small model on the QA2D dataset published by domenicrosati, which consists of factual statements along with their corresponding questions and answers, after preprocessing it to remove null values, duplicates, and outliers. Training ran for four epochs on 63,911 samples with a learning rate of 2e-5 on an Nvidia GTX 1080 GPU, using the PyTorch-based Trainer from the Hugging Face Transformers API. We evaluated the model using training loss, evaluation loss, and the ROUGE-1 metric, which indicated that it generates accurate questions from declarative statements. We also explored ethical considerations and possible applications of the model, ranging from simple question generators to advanced multiple-choice question generators.
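To make the ROUGE-1 metric concrete, here is a minimal sketch of how it can be computed for a single reference and generated question. It assumes lowercase matching and whitespace tokenization; the function name `rouge1` and the example strings are illustrative and not taken from the project, whose actual evaluation code is not shown here.

```python
from collections import Counter


def rouge1(reference: str, candidate: str) -> dict:
    """Unigram-overlap precision, recall, and F1 (hypothetical helper)."""
    # Assumption: simple lowercase + whitespace tokenization.
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())

    # Clipped overlap: each word counts at most as often as it
    # appears in both the reference and the candidate.
    overlap = sum((ref_counts & cand_counts).values())

    cand_total = sum(cand_counts.values())
    ref_total = sum(ref_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# Illustrative strings only, not samples from QA2D.
scores = rouge1(
    "what is the capital of france",
    "what is the capital city of france",
)
print(scores)
```

In this example every reference word appears in the generated question, so recall is 1.0, while the extra word "city" lowers precision to 6/7.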

Carbon Emission Predictor

Our AI project addresses the issue of people’s unawareness of their carbon emissions by developing a model to estimate individual carbon footprints. We used the “Individual Carbon Footprint Calculation” dataset from Kaggle, but incomplete data forced us to remove one column. Initial tests with linear regression produced predictions that appeared close to actual emissions, yet the model’s evaluation metrics (MSE, MAE, R^2) were unsatisfactory, with values around 0.5. An attempt to improve accuracy with logistic regression produced results too inaccurate for practical use. Going forward, we plan to improve the model’s accuracy by either pruning problematic data or enriching our dataset to strengthen the predictive correlations.
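For readers unfamiliar with the three metrics, the following sketch shows how MSE, MAE, and R^2 are computed from true and predicted values. The function name `regression_metrics` and the numbers are made up for illustration and are not the project's data. Note that MSE and MAE are errors, so lower is better, whereas R^2 measures explained variance, so values closer to 1 are better.

```python
def regression_metrics(y_true, y_pred):
    """Return (MSE, MAE, R^2) for two equal-length sequences (hypothetical helper)."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mse = sum(e * e for e in errors) / n      # mean squared error
    mae = sum(abs(e) for e in errors) / n     # mean absolute error

    # R^2 = 1 - (residual sum of squares / total sum of squares)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return mse, mae, r2


# Toy values only, not taken from the Kaggle dataset.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
mse, mae, r2 = regression_metrics(y_true, y_pred)
print(mse, mae, r2)
```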

Combating Heart Disease with ML

Our team embarked on a project to develop an AI-powered heart disease prediction model, combining our expertise in health sciences, computer science, and mathematics. The project involved acquiring a prepared dataset, using machine learning algorithms to build and refine our model, and emphasizing ethical considerations in AI applications. Throughout the process, we focused on combating biases, enhancing our model’s accuracy with various techniques, and underlining the importance of ethical guidelines. Our work not only showcased the potential of AI in healthcare but also stressed the need for ongoing refinement and responsible AI practices.

AI Cubism: Neural Network Image Generation

Our project, “Cubism AI,” focuses on developing an AI model capable of transforming standard images into cubism-styled artworks. We began by collecting cubism artworks from the WikiArt website, which serve as the training data for our model. We addressed ethical considerations to ensure the AI enhances artistic creativity without replacing the artist’s unique role. The model uses the VGG19 image-recognition architecture and involves steps such as feature extraction and optimization. Throughout the project, we navigated challenges related to model parameters and computational resources, and identified areas for future improvement such as batch processing and tuning of model settings.

Disease Diagnosis Medical Image Classification

Our team embarked on an AI project to automate disease detection from medical X-ray images, with the aim of streamlining doctor workflows and expediting patient diagnoses. We broadened our scope from cancer to include various diseases. Using pre-trained models such as VGG16 and ResNet50, we faced challenges including managing a large dataset of 12,446 images from Dhaka’s PACS system and dealing with computational limits and overfitting. Our CNN model was specifically tailored for classifying kidney-related conditions, and we ensured patient data anonymity to uphold ethical standards. Future goals involve expanding our dataset for broader demographic representation and testing the model’s efficacy across various diseases to enhance its global applicability and accuracy.

Driver Drowsiness Detection

Driver drowsiness poses a significant risk to road safety, often leading to accidents and fatalities. To address this, Convolutional Neural Networks (CNNs) are utilized for their ability to analyze visual data and recognize facial cues indicative of drowsiness. Our model, trained on over 41,790 RGB images, categorizes states into Drowsy and Non-Drowsy, using real-time video processing to detect signs like eye closure and head nods, and providing immediate feedback. However, implementing this CNN-based detection faces challenges such as ensuring real-time processing, maintaining accuracy, and adapting to variable conditions like lighting and individual driver features. Nevertheless, this CNN approach shows substantial potential in enhancing road safety, underscoring the need for ongoing research and development.

TradeMind Stock Analysis

TradeMind is dedicated to becoming an essential stock analytics platform, designed to assist both seasoned and novice investors in navigating the complexities of the stock market. Our mission is to enhance your financial portfolio and future wealth by leveraging artificial intelligence, particularly through polynomial regression analysis. This technique models the relationship between dates and stock closing prices, providing a sophisticated tool to predict future trends based on historical S&P 500 stock data from 2013 to 2018. Our platform, accessible via a REST API and featuring a custom-built React frontend, offers insightful predictions to help you make informed investment decisions.
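As a rough illustration of the polynomial regression idea, the sketch below fits a polynomial to (day index, closing price) pairs by solving the least-squares normal equations in plain Python. It assumes dates have already been converted to numeric day indexes; the helper names `fit_polynomial` and `predict`, the degree, and the synthetic prices are all assumptions for illustration and do not reflect TradeMind's actual implementation or the S&P 500 data.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit; returns coefficients c0, c1, ..., c_degree."""
    n = degree + 1
    # Normal equations A c = b with A[i][j] = sum(x^(i+j)), b[i] = sum(y * x^i).
    A = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]

    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = A[row][col] / A[col][col]
            for k in range(col, n):
                A[row][k] -= factor * A[col][k]
            b[row] -= factor * b[col]

    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(A[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - tail) / A[i][i]
    return coeffs


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


# Synthetic data: day indexes 0..5 and prices generated from
# 2 + 0.5*x + 0.1*x^2 (not real market data).
days = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
closes = [2 + 0.5 * d + 0.1 * d ** 2 for d in days]

coeffs = fit_polynomial(days, closes, degree=2)
next_close = predict(coeffs, 6.0)  # extrapolate one day ahead
print(coeffs, next_close)
```

Because the synthetic prices follow an exact quadratic, the fit recovers the generating coefficients. Real closing prices are noisy, and extrapolating a polynomial far beyond the training range can diverge quickly, so forecasts of this kind should be treated with caution.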

TweetQuake – Disaster Response Message Generation

TweetQuake uses machine learning to categorize tweets related to disasters. Key steps included finding a suitable dataset, cleaning the data, and organizing it for analysis. Our fine-tuning experiments suggest that increasing the number of training epochs, dividing the dataset into appropriate batch sizes, and adjusting the learning rate and optimizer would improve the model’s adaptability. Future steps involve deploying the model on a website that categorizes texts with multiple labels and improving the parsing of web-scraped data.